Automakers and tech companies are racing to get the first self-driving car on the road.

Ford, GM, Tesla, Lyft, Google, and more all plan to have some form of autonomous car ready for commercial use within the next five years. In fact, Goldman Sachs estimates that by 2030 driverless cars could make up as much as 60% of US auto sales.

While companies all have their own reasons for investing in this technology, they all agree that one of the biggest benefits of autonomous cars will be improved safety.

How, you might ask, is a car with no human driver, no steering wheel, and no brake pedal safer than the cars we currently drive?

Well, it all comes down to the tech used to enable autonomous vehicles.

The sensors

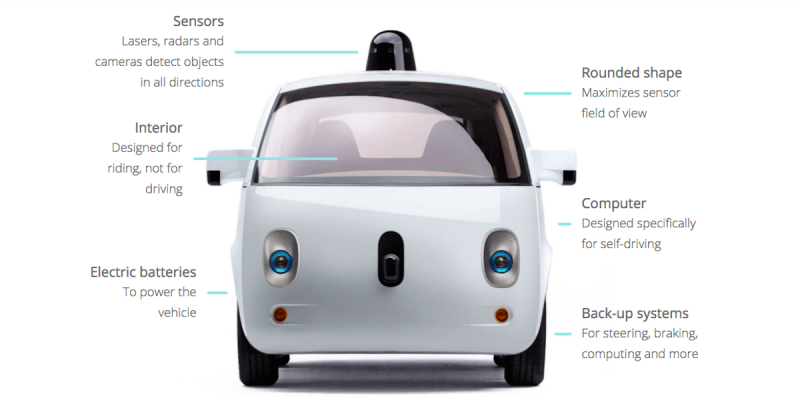

Driverless cars are designed to have an almost superhuman ability to recognize the world around them. They use loads of sensors to gather tons of data about their surroundings so they can operate seamlessly in a constantly changing environment.

Essentially, companies developing autonomous vehicles are trying to use these sensors to replicate how a human drives.

"As a human you have senses, you have your eyes, you have your ears, and sometimes you have the sense of touch, you are feeling the road. So those are your inputs and then those senses feed into your brain and your brain makes a decision on how to control your feet and your hands in terms of braking and pressing the gas and steering. So on an autonomous car you have to replace those senses," Danny Shapiro, senior director of Nvidia's automotive business unit, told Business Insider.

Some of the sensors used on autonomous cars include cameras, radar, lasers, and ultrasonic sensors. GPS and mapping technology are also used to help the car determine its position.

All of these have different strengths and weaknesses, but essentially they enable a lot of data to flow into the car.
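One common way to combine overlapping readings, so that each sensor's weaknesses are offset by the others' strengths, is inverse-variance weighting. Under this method, each sensor's estimate counts in proportion to how reliable it is assumed to be. The sketch below is minimal and rests on stated assumptions. The `Reading` type, the sensor names, and every number are illustrative, not figures from any automaker. Production systems typically run more elaborate filters, such as Kalman filters, over many objects at once.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One sensor's estimate of the distance to the same object (hypothetical type)."""
    sensor: str
    distance_m: float  # estimated distance to the object, in meters
    variance: float    # assumed noise of this sensor; larger means less trustworthy


def fuse(readings: list[Reading]) -> tuple[float, float]:
    """Combine independent estimates with inverse-variance weighting.

    Each reading is weighted by 1 / variance, so noisier sensors count less.
    Returns (fused_distance_m, fused_variance).
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / r.variance for r in readings]
    total = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    return fused, 1.0 / total


if __name__ == "__main__":
    # Illustrative values only: the variances stand in for each sensor's
    # strengths and weaknesses under some assumed driving conditions.
    readings = [
        Reading("camera", 21.0, 4.0),
        Reading("radar", 19.5, 1.0),
        Reading("lidar", 20.0, 0.25),
    ]
    distance, variance = fuse(readings)
    print(f"fused distance: {distance:.2f} m, variance: {variance:.3f}")
```

With these made-up numbers, the fused distance lands near the most trusted sensor's reading (about 19.95 m). The fused variance (about 0.19) is also smaller than any single sensor's, which is the payoff of combining them.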

The brain

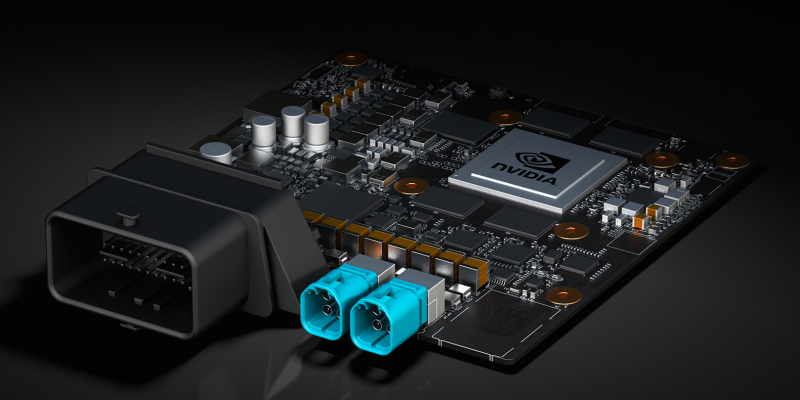

All of this collected data is then fed into the car's computer system, or "brain," so to speak, and is processed so that the car can make decisions.

One of the leading companies building the brains for these cars is the chipmaker Nvidia. In fact, Tesla's Autopilot system uses Nvidia's Drive PX2, which is the company's newest computer system for autonomous cars.

Drive PX2 is a powerful computer platform about the size of a license plate. It uses a variety of Nvidia's chips and software to take all of the data coming in from an autonomous car's sensors and build a three-dimensional model of the car's environment, Shapiro said.
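Shapiro doesn't detail how Drive PX2 builds that three-dimensional model, so the following is only a generic sketch of one basic ingredient. It turns laser (lidar) returns, each a beam direction plus a measured distance, into x, y, z points around the car. The `LaserReturn` format and the axis conventions are assumptions made for illustration.

```python
import math
from typing import NamedTuple


class LaserReturn(NamedTuple):
    """One laser return (hypothetical format): beam direction plus measured range."""
    azimuth_deg: float    # horizontal angle; 0 = straight ahead, positive = to the left
    elevation_deg: float  # vertical angle above the sensor's horizontal plane
    range_m: float        # distance the beam traveled before hitting something


Point = tuple[float, float, float]


def to_point(r: LaserReturn) -> Point:
    """Convert a return from spherical coordinates to (x, y, z) in the car's frame.

    x points forward, y to the left, z up, with the sensor at the origin.
    """
    az = math.radians(r.azimuth_deg)
    el = math.radians(r.elevation_deg)
    horizontal = r.range_m * math.cos(el)  # projection onto the ground plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), r.range_m * math.sin(el))


def build_point_cloud(returns: list[LaserReturn]) -> list[Point]:
    """Turn one sweep of returns into a simple 3D point cloud."""
    return [to_point(r) for r in returns]


if __name__ == "__main__":
    sweep = [
        LaserReturn(0.0, 0.0, 10.0),   # something 10 m straight ahead
        LaserReturn(90.0, 0.0, 5.0),   # something 5 m directly to the left
        LaserReturn(0.0, 30.0, 2.0),   # something ahead of and above the sensor
    ]
    for x, y, z in build_point_cloud(sweep):
        print(f"x={x:6.2f}  y={y:6.2f}  z={z:6.2f}")
```

A real pipeline would go on to group these points into objects and combine them with camera and radar data. This sketch stops at the raw point cloud.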

"In the brain of the car, it almost looks like a video game. We are essentially recreating the world in a virtual 3D space," he said.

To do this, Nvidia and other companies developing driverless tech use a little something called machine learning.

How it learns

Machine learning is a way of teaching algorithms by example or experience, and companies are using it for all kinds of things these days. For example, Netflix and Amazon both use machine learning to make recommendations based on what you have watched or purchased in the past.

To train a self-driving car, you would first drive it hundreds of miles to collect sensor data. You would then process that data in a data center, identifying what each object is frame by frame.

"Initially, the computer doesn't know anything. We have to teach it. And so what we want to do is if we want to teach it to recognize pedestrians, we would feed it pictures of pedestrians. But what we can do is feed it millions of pictures of pedestrians because pedestrians look different," Shapiro said.

"The more data we feed it, the more vocabulary it has and the more it can recognize what a pedestrian is. And we do the same thing with bicyclists, cars, trucks, and we do it at all times of day and different weather conditions. So again, essentially it has this infinite capability to build up a memory and understanding of what all of these different types of things it could encounter would look like," he said.

It might sound complicated, but when you think about it, it's not that different from how humans learn.

When you were born, you didn't know what anything was. But your parents or whoever raised you repeatedly pointed things out so that you could identify different objects and people.
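The teach-by-example idea can be shown with a deliberately tiny stand-in. Systems like the ones Shapiro describes train deep neural networks on huge numbers of labeled frames. The sketch below swaps in a much simpler technique, a nearest-centroid classifier, over made-up features (height, width, and speed). The features, labels, and numbers are all hypothetical. The classifier "learns" one average example per label, then labels a new object by whichever average it sits closest to.

```python
import math
from collections import defaultdict

Vector = tuple[float, ...]


def train(examples: list[tuple[Vector, str]]) -> dict[str, Vector]:
    """Learn one average feature vector (centroid) per label from labeled examples."""
    sums: dict[str, list[float]] = {}
    counts: dict[str, int] = defaultdict(int)
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: tuple(s / counts[label] for s in total) for label, total in sums.items()}


def predict(centroids: dict[str, Vector], features: Vector) -> str:
    """Return the label whose centroid is closest (Euclidean distance) to the input."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


if __name__ == "__main__":
    # Hypothetical features: (height_m, width_m, speed_m_per_s)
    examples = [
        ((1.7, 0.5, 1.4), "pedestrian"),
        ((1.6, 0.6, 1.2), "pedestrian"),
        ((1.8, 0.5, 1.5), "pedestrian"),
        ((1.7, 0.6, 5.0), "bicyclist"),
        ((1.8, 0.7, 6.0), "bicyclist"),
        ((1.5, 1.8, 12.0), "car"),
        ((1.4, 1.9, 15.0), "car"),
    ]
    model = train(examples)
    print(predict(model, (1.75, 0.55, 1.3)))
```

More (and more varied) labeled examples move each centroid closer to what that kind of object really looks like, which is a toy version of Shapiro's point about "vocabulary." Because these features aren't rescaled, speed dominates the distance here. A real model would normalize its inputs and learn far richer features directly from sensor data.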

Superhuman driving skills

Once a computer model is created, it's loaded into the car's brain and hooked up to the rest of the car's sensors to create a real-world model of the car's environment.

The car uses this model to make decisions about how it should respond in different situations. And because the car has sensors all around it, it has access to a lot more data than a human driver to help it make those decisions.

"There are insightful factors that get factored in. For example, if you are driving along and there's a parked car with nobody in it, the vehicle will proceed next to that car," Shapiro said.

"But if it sees the door is slightly open and there is somebody in it, well the expectation is that the door will open at any moment and someone will try to get out of that car. So at that point, when the car senses that, it's either going to slow down, or switch lanes if it can, and proceed with caution. And because it has a full 360-degree view around the car, it can be tracking multiple objects, with much greater things happening, with much greater accuracy than any human."
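Shapiro's parked-car example can be written as a simple rule. This is a hypothetical sketch only. The `ParkedCar` fields and the rule itself are assumptions that mirror his description. They are not how Nvidia or any automaker actually encodes driving behavior, which is far more involved.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    PROCEED = "proceed"
    SLOW_DOWN = "slow down"
    CHANGE_LANE = "change lane"


@dataclass
class ParkedCar:
    """What a (hypothetical) perception system reports about one parked car."""
    door_ajar: bool
    occupied: bool


def respond(car: ParkedCar, adjacent_lane_clear: bool) -> Action:
    """Hypothetical rule mirroring Shapiro's example.

    An empty parked car is passed normally. If the door is open and someone is
    inside, expect the door to swing open: switch lanes when the next lane is
    clear, otherwise slow down.
    """
    if car.door_ajar and car.occupied:
        return Action.CHANGE_LANE if adjacent_lane_clear else Action.SLOW_DOWN
    return Action.PROCEED


if __name__ == "__main__":
    # With a 360-degree view, the same rule can be applied to every tracked parked car.
    tracked = [
        ParkedCar(door_ajar=False, occupied=False),
        ParkedCar(door_ajar=True, occupied=True),
    ]
    for car in tracked:
        print(car, "->", respond(car, adjacent_lane_clear=False).value)
```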