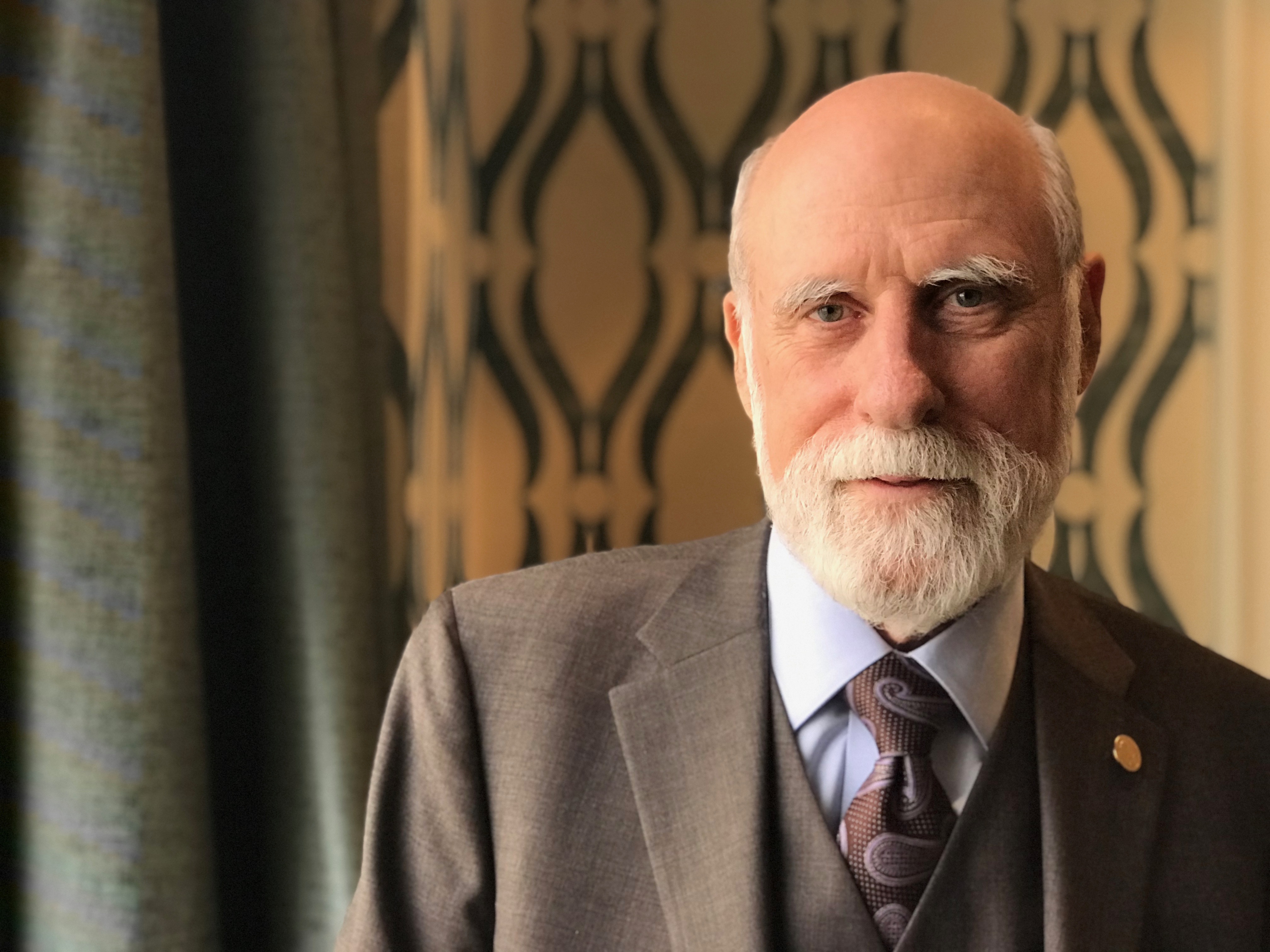

- Vint Cerf, one of the fathers of the internet, this week defended Google’s work on Project Maven – its controversial pilot program with the Pentagon.

- Employee objections to the work, which involved developing artificial-intelligence technologies for use by the Defense Department, were based on a misunderstanding of the nature of the work, said Cerf, who is Google’s chief internet evangelist.

- But the company could have been more transparent about what it was doing, he acknowledged.

If you ask Vint Cerf, the protests at Google about its contract to help develop artificial-intelligence technologies for the military can be chalked up to a misunderstanding.

Employees who objected to the company’s work for the Defense Department didn’t really understand the nature of the work or its benefits, Cerf, a vice president at the search giant and its chief internet evangelist, told Business Insider this week at the “Our People-Centered Digital Future” conference in San Jose.

Although some Google employees expressed concern that the technology could be used by the military to kill people, that’s not what the project was about, he said.

“People were leaping to conclusions about the purpose of those contracts and the objectives of the contracts that I don’t think were justifiable,” said Cerf, chairman of the People Centered Internet coalition, which helped organize the conference. PCI is an international group dedicated to ensuring “that the Internet continues to improve people’s lives and livelihoods and that the Internet is a positive force for good.”

A quiet contract led to mass protests

Google quietly signed a contract to provide its AI technology to the military as part of the latter's Project Maven effort in September 2017. Under the contract, the company was due to help the Defense Department use machine-learning algorithms to analyze drone footage.

When employees learned of the effort in February, many of them were outraged. Many expressed concern that even if the technology wasn't initially developed for lethal purposes, it could eventually be used for that. More than 4,000 signed a letter demanding that the company cancel the contract and promise to never build "warfare technology." About a dozen also resigned from the company in protest over it.

The company announced in June that it wouldn't renew the contract when it expires next year, and company CEO Sundar Pichai announced a set of principles that would guide its AI development and applications. Among other things, he said the company wouldn't "design or deploy" AI that would be used for weapons or for illegitimate surveillance.

Cerf said the contract was about "situational awareness"

To Cerf, though, the brouhaha was overblown. Employee concerns about the use of Google's AI technology by the military were generally unfounded, he said.

As he understands it, the purpose of the contract was to develop technology to automatically detect things that might be harmful, such as people putting in place improvised explosive devices. It wasn't designed to automatically detect and target individuals, he said.

"That particular project, as far as I know, was not to do that. It was a situational awareness application," said Cerf, who's widely considered to be one of the fathers of the internet, because he helped develop its underlying data transfer protocols. "How can I recognize what's going on around me in order to protect against [it] ... Can I automatically discover things going on in my environment that might be harmful?"

But according to Gizmodo, which first reported on Google's Project Maven contract, the company had hoped the effort would lead to much more work for it with the military and with US intelligence agencies. As part of the project, it was already developing a system for the military that could be used to surveil entire cities, Gizmodo reported.

The military does more than fight wars

More broadly, Cerf said, employees misunderstood the breadth of the military's mission and technology efforts. The Defense Department does more than just fight wars, he noted. Among other things, the military has helped develop technologies that have proven to be invaluable in the private sector, he said, noting his own work in the 1970s and 1980s developing internet technology on behalf of the Defense Advanced Research Projects Agency.

"There is a lot of misunderstanding about the positive benefits of working with [and] in the public sector, the military being a part of that," he said.

Google declined to publicize its work on Project Maven and, when it came to light, downplayed its involvement, according to published reports. The company initially told Gizmodo that its work on the project only consisted of providing the military access to the same kind of open source machine-learning tools it provides to other corporate and institutional customers of its cloud services. But the company had actually assigned more than 10 employees to the project and was actively working to customize its AI technology for the military, according to Gizmodo.

Cerf acknowledged Google may bear some responsibility for its employees' misapprehension.

"There probably was not enough transparency" regarding its Project Maven work, he said.

- Read more about Google and the ethical issues surrounding AI:

- Amazon's cloud CEO just pooh-poohed employee concerns about selling its facial-recognition software to ICE and law enforcement

- It's become increasingly clear that Alphabet, Google's parent company, needs new leadership

- Most companies using AI say their No.1 fear is hackers hijacking the technology, according to a new survey that found attacks are already happening

- Google's recent behavior shows the troubling reality of an internet superpower that abandoned its vow to not 'be evil'