- On March 18 one of Uber’s self-driving cars killed a pedestrian, the first pedestrian fatality involving a self-driving car.

- As Uber prepares to return its cars to the roads, Business Insider spoke to current and former employees and viewed internal documents.

- These employees and documents described vast dysfunctionality in Uber’s Advanced Technologies Group, with rampant infighting and pressure to catch up to competitors, issues that these employees say continue to this day.

- Sources say engineers were pressured to “tune” the self-driving car for a smoother ride in preparation for a big, planned year-end demonstration of their progress. But that meant not allowing the car to respond to everything it saw, real or not.

- “This could have killed a toddler … That’s the accident that didn’t happen but could have,” one employee told Business Insider.

At 9:58 p.m. on a nearly moonless Sunday night, 49-year-old Elaine Herzberg stepped into a busy section of Mill Road in Tempe, Arizona, pushing her pink bicycle. A few seconds later, one of Uber’s self-driving Volvo SUVs ran into her at 39 MPH and killed her.

Inside the car, safety driver Rafaela Vasquez was working alone, as all drivers did at the time. She kept taking her eyes off the road, but not to enter data into an iPad app as her job required. She was streaming an episode of “The Voice” over Hulu on her phone. She looked up again just as the car hit Herzberg, grabbed the wheel and gasped.

These are the details of the March 18 incident according to the preliminary National Transportation Safety Board (NTSB) report, police reports and a video of the incident released by police.

The incident shocked people inside Uber’s Advanced Technologies Group, the company’s 1,100-person self-driving unit. Employees shared their horror in chat rooms and in the halls, several employees told Business Insider.

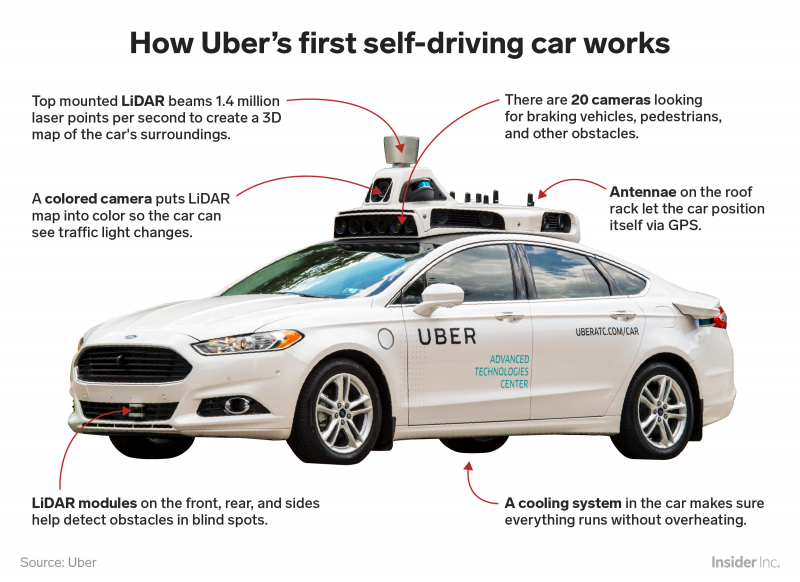

Self-driving cars are promoted as being safer than humans, able to see and react with the speed of a computer. But one of Uber's cars had been involved in the first-ever self-driving fatality of a pedestrian.

When employees learned that Herzberg was a jaywalking homeless woman and that her blood tested positive for meth and pot, many seized on those details to explain away the tragedy, several employees told us. (Uber has since settled a lawsuit from her daughter and husband.)

When employees discovered Vasquez was watching Hulu, and was a convicted felon before Uber hired her, they vilified her.

"People were blaming everything on her," one employee said.

But insiders tell us that Vasquez and Herzberg were not the only factors in this death. There was a third party that deserves some blame, they say: the car itself, and a laundry list of questionable decisions made by the people who built it.

Uber's car had actually spotted Herzberg six seconds before it hit her, and a second before impact, it knew it needed to brake hard, the NTSB reported.

But it didn't.

It couldn't.

Its creators had forbidden the car from slamming on the brakes in an emergency maneuver, even if it detected a "squishy thing," Uber's term for a human or an animal, sources told Business Insider. The NTSB report also said that Uber had deliberately disabled the self-driving system's emergency braking.

The car's creators had also disabled the Volvo's own factory-installed emergency braking, the report found, and insiders confirmed this to Business Insider.


According to emails seen by Business Insider, they had even tinkered with the car's ability to swerve.

Much has been written about the death of Elaine Herzberg, most of it focused on the failings of the driver. But, until now, not much has been revealed about why engineers and senior leaders turned off the car's ability to stop itself.

Insiders told us that it was a result of chaos inside the organization, and that it may have been motivated, at least in part, by a desire to please the boss.

Business Insider spoke to seven current and former employees of Uber's self-driving car unit who worked there at the time of the accident and in the months that followed. We viewed internal emails, meeting notes and other documents relating to the self-driving car program and the incident.

These insiders allege that, despite many warnings about the car's safety, the senior leadership team had a pressing concern in the months before the accident: impressing Uber's new CEO, Dara Khosrowshahi, with a demo ride that gave him a pleasant "ride experience."

These employees and documents paint a picture of:

- Leadership that feared Khosrowshahi was contemplating canceling the project, ending their very high-paying jobs, so they wanted to show him progress.

- Engineers allegedly making dangerous trade-offs with safety as they were told to create a smooth riding experience.

- Incentives and priorities that pressured teams to move fast, claim progress, and please a new CEO.

- A series of warning signals that were either ignored or not taken seriously enough.

- Vast dysfunctionality in Uber's Advanced Technologies Group, with infighting so rampant that no one seemed to know what others were doing.

After the accident on March 18, Uber grounded the fleet. Although the NTSB's final report has not yet been released (it's expected in April, sources tell us), the company is already making plans to put its cars back on public roads, according to documents viewed by Business Insider. Uber is trying to catch up to competitors like Google's Waymo and GM, who never halted their road tests.

'Could have killed a toddler'

To some Uber insiders, Herzberg's death was the tragic but unsurprising consequence of a self-driving car that should not have been driving on the open road at night and perhaps not at all.

Uber's Advanced Technologies Group, the unit building self-driving vehicles, is currently spending $600 million a year, sources familiar with the matter tell Business Insider, although others have said the budget has been closer to $1 billion a year. And it remains woefully behind the self-driving car market leaders in every measurable way, although Uber tells us that the company has "every confidence in the work" the team is doing to get back on track.

At the time of the accident, engineers knew the car's self-driving software was immature and having trouble recognizing or predicting the paths of a wide variety of objects, including pedestrians, in various circumstances, according to all the employees we talked to.

For instance, the car was poorly equipped for "near-range sensing," so it wasn't always detecting objects within a couple of meters of it, two people confirmed to Business Insider.

"This could have killed a toddler in a parking lot. That was our scenario. That's the accident that didn't happen, but could have," one software developer said.

Every week, software team leaders were briefed on hundreds of problems, ranging from minor to serious, people told us, and the issues weren't always easy to fix.

Take tree branches, for example.

For weeks on end, during a regular "triage" meeting where issues were prioritized by vice president of software Jon Thomason, tree branches kept coming up, one former engineer told us.

Tree branches create shadows in the road that the car sometimes thought were physical obstacles, multiple people told us.

Uber's software "would classify them as objects that are actually moving and the cars would do something stupid, like stop or call for remote assistance," one engineer explained. "Or the software might crash and get out of autonomy mode. This was a common issue that we were trying to fix."

Thomason grew irate at one of these meetings, another engineer recalls, and demanded the problem be fixed. "This is unacceptable! We are above this! We shouldn't be getting stuck on tree branches, so go figure it out," Thomason said.

An Uber spokesperson denies that the car stops for tree branch shadows. This spokesperson said the car stops for actual tree branches in the road.

Meanwhile, another employee also said that piles of leaves could confuse the car. A third employee told us of other efforts to teach the car to recognize foliage.

Employees also said the car was not always able to predict the path of a pedestrian. And according to an email reviewed by Business Insider, the car's software didn't always know what to do when something partially blocked the lane it was driving in.

On top of all of this, a number of engineers at Uber said they believed the cars were not being thoroughly tested in safer settings. They wanted better simulation software, used more frequently.

The company started to address that concern before the accident when it hired a respected simulation engineer in February. Recently, Uber has publicly vowed to do more simulation testing when it is allowed to send its cars back on the open road again.

But before Herzberg's accident, "We just didn't invest in it. We had sh--. Our off-line testing, now called simulation, was almost non-existent, utter garbage," as one described it.

Besides simulation, another way to test a self-driving car is on a track.

But employees we spoke to described Uber's track testing efforts as disorganized, with each project team doing it their own way and no one overseeing testing as a whole.

This kind of holistic oversight is another area that Uber says it is currently addressing.

Yet, even now, months after the tragedy, these employees say that rigorous, holistic safety testing remains weak. They say that the safety team has been mostly working on a "taxonomy" (in other words, a list of safety-related terms to use) and not on making sure the car performs reliably in every setting. Uber tells us that the safety team has been working on both the taxonomy and the tests themselves.

Dara's ride

As employees worked, they were acutely aware of division leadership's plans to host a very important passenger: Uber's new CEO, Dara Khosrowshahi.

Khosrowshahi had taken over as Uber CEO in the summer of 2017, following a tumultuous year in which the company was battered by a string of scandals involving everything from sexual harassment allegations to reports of unsavory business practices. The self-driving car group wasn't immune, with Anthony Levandowski, its leader and star engineer, ousted in April 2017, amid accusations of IP theft.

The unit's current leader, Eric Meyhofer, took Levandowski's place just five months before Khosrowshahi was hired.

Despite Uber's massive investment in self-driving cars, its program was considered to be far behind the competition. News reports speculated that Khosrowshahi should just shut it down.

None of this was lost on Meyhofer and the senior team, who wanted to impress their new CEO with a show of progress, sources and documents said.

Plans were made to take Khosrowshahi on a demo ride sometime around April and to have a big year-end public demonstration. ATG needed to "sizzle," Meyhofer liked to say, people told us. Internally, people began talking about "Dara's ride" and "Dara's run."

The stakes were high. If ATG were shut down, it could end the leadership team's high-paying jobs. Senior engineers were making over $400,000, and directors made in the $1 million range between salary, bonus and stock options, multiple employees said.

Leadership also had their reputation at stake. They did not want to be forever known as the ones who led Uber's much-publicized project to its death, people close to Meyhofer explained.

Internally, unit leaders geared up to pull off the "sizzle."

'Bad experiences'

As the world's largest ride-hailing company, Uber understood the need to give customers a good experience. If passengers were going to accept self-driving cars, the ride could not be the jarring experience that had made a BuzzFeed reporter carsick during a demonstration.

So, in November, a month after Khosrowshahi became their new boss, Eric Hansen, a senior member of the product team, sent out a "product requirement document" that spelled out a new goal for ATG, according to an email viewed by Business Insider. (Hansen has since become the director of the product group.)

The document asked engineers to think of "rider experience metrics" and allowed no more than one "bad experience" per ride for the big, year-end demonstration.

Given how immature the car's autonomous software was at the time, "that's an awfully high bar to meet," one software developer said.

Some engineers who had been focused on fixing safety-related issues were aghast. Engineers can easily enough "tune" a self-driving car to drive more smoothly, but with immature software, that meant not allowing the car to respond to everything it saw, real or not, sources explained. And that could be risky.

"If you put a person inside the vehicle and the chances of that person dying is 12%, you should not be discussing anything about user experience," one frustrated engineer hypothesized. "The priority should not be about a user experience but about safety."

Two days after the product team distributed the document discussing "rider experience metrics" and limiting "bad experiences" to one per ride, another email went out. This one was from several ATG engineers. It said they were turning off the car's ability to make emergency decisions on its own, like slamming on the brakes or swerving hard.

Their rationale was safety.

"These emergency actions have real-world risk if the VO ["vehicle operator" or safety driver] does not take over in time and other drivers are not attentive, so it is better to suppress plans with emergency actions in online operation," the email read.

In other words, they reasoned, such quick moves can startle other drivers on the road, and if there were a real threat, the safety driver would already have taken over. So they resolved to limit the car's actions and rely wholly on the safety driver's alertness.

The subtext was clear: the car's software wasn't good enough to make emergency decisions. And, one employee pointed out to us, restricting the car to gentler responses might also produce a smoother ride.

A few weeks later, they gave the car back more of its ability to swerve but did not return its ability to brake hard.

Final warning

The final warning sign came just a couple of days before the tragedy.

One of the lead safety drivers sent an email to Meyhofer laying out a long list of grievances about the mismanagement and working conditions of the safety driver program.

"The drivers felt they were not being utilized well. That they were being asked to drive around in circles but that their feedback was not changing anything," said one former engineer of Uber's self-driving car unit who was familiar with the driver program.

Drivers complained about long hours and not enough communication on what they should be testing and watching for. But the big complaint was the decision a few months earlier to start using one driver instead of two. That choice instantly gave ATG access to more drivers so the company could log more mileage without having to hire double the drivers.

The second driver used to be responsible for logging the car's issues into an iPad app and dealing with the car's requests to identify objects on the road.

Now one person had to do everything, employees told us.

This not only eliminated the safety redundancy of having a second driver; it also required the active driver to do the logging and tagging instead of keeping their eyes on the road, which some inside the company believed was unsafe.

It was like distracted driving, "like watching their cell phone 10-15% of the time," said one software engineer.

If Meyhofer took the angry email from that safety driver seriously - and multiple people told us he'd been reacting with frustration to people he viewed as naysayers - he didn't have a chance to act on it before the tragedy that benched the cars. Uber now says the self-driving car unit plans to return to the two driver system when it sends its cars on the road again.

A 'toxic culture'

Some employees believe that ATG's leaders were pushing for a smooth "ride experience" so that, during that planned demo ride, Khosrowshahi would believe the car was farther along than it was.

But others say mistakes were less conscious than that.

One engineer who worked closely with Meyhofer said the real problem was that under his leadership there was "poor communication, with a bunch of teams duplicating effort," adding, "One group doesn't know what the other is doing."

This engineer said one team would not know the other had disabled a feature, or that a feature didn't pass on the track test, or that drivers were saying a car performed badly.

"They only know their piece. You get this domino effect. All these things create an unsafe system," this person said.

Everyone we talked to also described the unit as a "toxic culture" under Meyhofer.

They talked of impossible workloads, backstabbing teammates and poor management.

According to documents viewed by Business Insider, leadership was aware of this reputation, with Thomason confessing to other leaders in a September meeting, "We repeatedly hear that ATG is not a fun place to work," and admitting such feedback was "baffling" to him.

While some people disliked Meyhofer and thought that he could be insecure to the point of vindictiveness if he was challenged, others described him as a nice guy with good intentions who was in over his head.

"He is a hardware guy. He runs a tight hardware ship," one engineer and former employee who worked closely with him told us. But the brain of a self-driving car is its software, this person said.

This person says that meant Meyhofer lacked "the understanding and know-how of the software space."

"Imagine a leader that can't weigh two options and decide the best course of action," described this engineer.

Everyone we spoke with agreed that part of the problem was that Uber's self-driving car unit was staffed by teams in two very different locations with two very different engineering cultures.

There was a team in Pittsburgh, anchored by folks from Carnegie Mellon's National Robotics Engineering Center (of which Meyhofer was an alum) and there were San Francisco-based teams.

The San Francisco people complained that the NREC folks were a bunch of academics with no real-world, product-building experience, who retained their high-paying jobs by virtue of being Meyhofer's cronies. The NREC team saw the San Francisco engineers as whiny and ungrateful, people described.

On top of the infighting, there was a bonus structure that rewarded some employees for speedily hitting milestones, careful testing or not, multiple sources described.

"At ATG, the attitude is I will do whatever it takes and I will get this huge bonus," one former engineer said. "I swear that everything that drives bad behaviors was the bonus structure."

In its safety report, Uber says some of the ways ATG was measuring the progress of its program before the accident created "perverse incentives."

Specifically, ATG, like everyone in the self-driving car industry, believed that the more miles a car drove itself without help from a human, the smarter it was. But the industry now realizes this is an overly simplistic way to measure how well a car drives. If management is too focused on that metric, employees may feel pressured not to take control of the car, even when they should.

Uber has since vowed to find other ways to measure improvement and tells us that milestone-based bonuses were limited to just a few people and have recently been eliminated.

Internally, some remain frustrated with the self-driving unit.

"Within ATG, we refused to take responsibility. They blamed it on the homeless lady, the Latina with a criminal record driving the car, even though we all knew Perception was broken," one software developer said, referring to the software called "Perception" that allows the car to interpret the data its sensors collect. "But our car hit a person. No one inside ATG said, 'We did something wrong and we should change our behavior.'"

The employees we talked to note that most of the same leadership team remains in place under Meyhofer, and some of them, like Hansen, have even been promoted. They allege that the unit's underlying culture hasn't really changed.

An Uber spokesperson says the company has reviewed its safety procedures, made many changes already and is promising to make many more. It described them all in a series of documents the company published this month, as it ramps up to hit the road again soon.

"Right now the entire team is focused on safely and responsibly returning to the road in self-driving mode," the spokesperson told us. "We have every confidence in the work they are doing to get us there. We recently took an important step forward with the release of our Voluntary Safety Self-Assessment. Our team remains committed to implementing key safety improvements, and we intend to resume on-the-road self-driving testing only when these improvements have been implemented and we have received authorization from the Pennsylvania Department of Transportation."

The Uber CEO's big ride-along never happened because of the March accident - at least not on any public roads. But that may change.

Meyhofer and most of his closest lieutenants remain in charge of ATG and want to get their self-driving cars on the road again as soon as possible, before the end of the year, maybe even later this month, employees say. In a September meeting of Meyhofer's senior leadership, the team was told: "We need to demonstrate something very publicly by 2019," according to documents seen by Business Insider.