- The AI war is going to be really, really, really expensive.

- Google DeepMind boss Demis Hassabis suggests Google will spend $100 billion-plus on AI development.

- His prediction signals just how much money tech giants will have to spend to make AI smarter.

Winning the AI wars won't come cheap. Just ask Demis Hassabis.

To kick off this year's TED conference, Google's AI boss gave an audience in Vancouver a reality check on Monday by offering his best guess on how much the search giant will spend on developing AI: more than $100 billion.

Hassabis, who leads the famed research lab DeepMind within Google, and is arguably the single most important figure at the center of Alphabet's AI plans, shared the astronomical number in response to a question about what the competition was up to.

According to a report from The Information last month, Microsoft and OpenAI have been drawing up plans to create a $100 billion supercomputer called "Stargate" containing "millions of specialized server chips" to power the ChatGPT-maker's AI.

Naturally, then, when Hassabis was asked about his rivals' rumored supercomputer and its cost, he was quick to note that Google's spend could top that: "We don't talk about our specific numbers, but I think we're investing more than that over time."

Though the generative AI boom has already triggered a huge surge of investment — AI startups alone raised almost $50 billion last year, per Crunchbase data — Hassabis' comments signal that competition to lead the AI sector is going to get a lot more expensive.

That's especially the case for companies like Google, Microsoft, and OpenAI, which are all engaged in an intense battle to emerge as the first to claim the development of artificial general intelligence, AI with the capacity to match human reasoning and ingenuity.

Chunky chips

Still, the idea that one company could spend more than $100 billion on a single technology that some think could be overhyped is eye-opening.

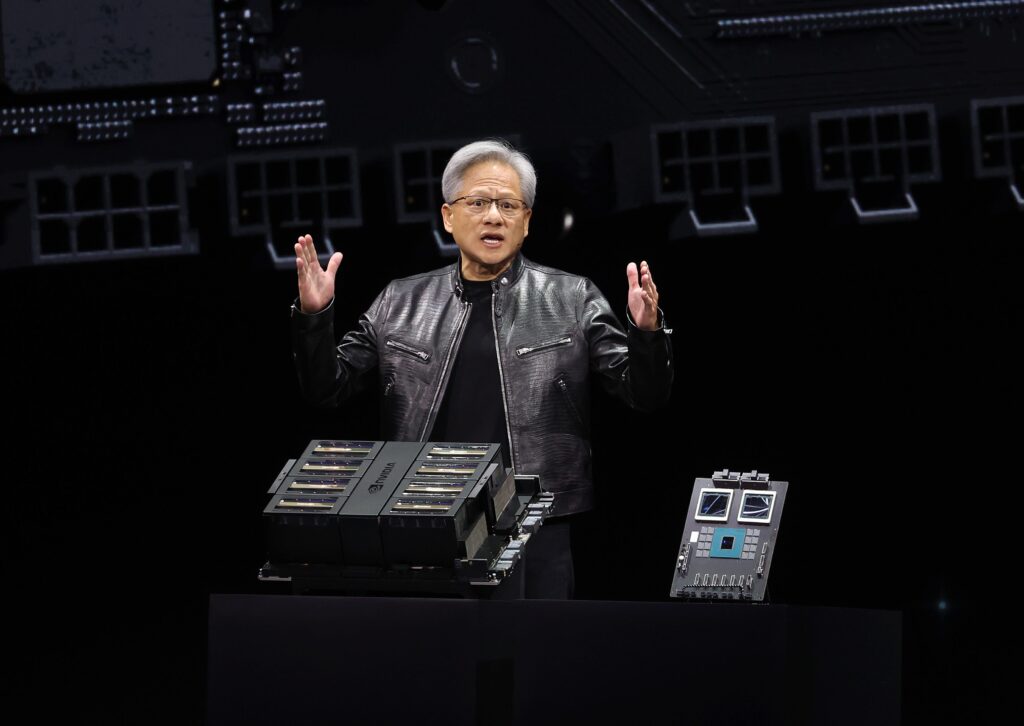

It's worth considering where that spending could go. For starters, a big chunk of the development cost will go toward chips.

They are one of the most expensive purchases for companies invested in the race to develop smarter AI. Simply put, the more chips you have, the more computing power is available to train AI models on greater volumes of data.

Companies working on large language models, like Google's Gemini and OpenAI's GPT-4 Turbo, have depended significantly on chips from third parties like Nvidia. But they're increasingly trying to design their own.

The general business of training models is getting more expensive, too.

Stanford University's annual AI index report, published this week, notes that the "training costs of state-of-the-art AI models have reached unprecedented levels."

It noted that OpenAI's GPT-4 used "an estimated $78 million worth of compute to train," versus the $4.3 million that was used to train GPT-3 in 2020. Google's Gemini Ultra, meanwhile, cost an estimated $191 million to train. The original Transformer model, which introduced the architecture behind today's large language models, cost about $900 to train in 2017.
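To put those figures side by side, here is a rough back-of-the-envelope sketch in Python. It uses only the dollar estimates quoted above; the dictionary labels and the `cost_multiple` helper are illustrative names, not anything from the Stanford report, and the results are only as reliable as the underlying estimates.

```python
# Training-cost estimates quoted in this article (US dollars).
# The labels are illustrative; the numbers are estimates, not audited figures.
training_cost_usd = {
    "Original 2017 model": 900,
    "GPT-3 (2020)": 4_300_000,
    "GPT-4": 78_000_000,
    "Gemini Ultra": 191_000_000,
}


def cost_multiple(later: str, earlier: str) -> float:
    """Return how many times more the later model cost to train than the earlier one."""
    return training_cost_usd[later] / training_cost_usd[earlier]


# Compare each model with the one listed before it.
names = list(training_cost_usd)
for earlier, later in zip(names, names[1:]):
    multiple = cost_multiple(later, earlier)
    print(f"{later} cost about {multiple:,.1f}x as much to train as {earlier}")
```

On these numbers, GPT-4 cost roughly 18 times as much to train as GPT-3, and Gemini Ultra roughly 2.4 times as much as GPT-4.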

The report also noted that "there is a direct correlation between the training costs of AI models and their computational requirements," so if AGI is the end goal, the cost is only likely to spiral.