Facebook is facing increasing criticism over its role in the 2016 US presidential election because it allowed propaganda and lies disguised as news stories to spread unchecked on the social-media site.

The spread of false information during the election cycle was so bad that President Barack Obama called Facebook a “dust cloud of nonsense.”

And Business Insider’s Alyson Shontell called Facebook CEO Mark Zuckerberg’s reaction to this criticism “tone-deaf.” His public stance is that fake news makes up such a small percentage of what is shared on Facebook that it couldn't have had an impact. He holds that view even as Facebook has officially vowed to do better and insisted that separating real news from lies is a difficult technical problem.

Just how hard is it for an algorithm to tell real news from lies?

Not that hard.

During a hackathon at Princeton University, four college students built such an algorithm, packaged as a Chrome browser extension, in just 36 hours. They named their project "FiB: Stop living a lie."

The students are Nabanita De, a second-year master's student in computer science at the University of Massachusetts at Amherst; Anant Goel, a freshman at Purdue University; Mark Craft, a sophomore at the University of Illinois at Urbana-Champaign; and Qinglin Chen, also a sophomore at the University of Illinois at Urbana-Champaign.

Their News Feed authenticity checker works like this, De tells us:

"It classifies every post, be it pictures (Twitter snapshots), adult content pictures, fake links, malware links, fake news links as verified or non-verified using artificial intelligence.

"For links, we take into account the website's reputation, also query it against malware and phishing websites database and also take the content, search it on Google/Bing, retrieve searches with high confidence and summarize that link and show to the user. For pictures like Twitter snapshots, we convert the image to text, use the usernames mentioned in the tweet, to get all tweets of the user and check if current tweet was ever posted by the user."
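To make the checks De describes more concrete, here is a minimal, hypothetical sketch of how the results might be combined into a verdict. It is not FiB's actual code: the blocklist, the reputation scores, the thresholds, and the function names are invented stand-ins for the reputation data, malware and phishing database, and Google/Bing search results the students queried. The tweet check assumes the screenshot has already been converted to text.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

# Hypothetical stand-ins for the external services FiB queried.
BLOCKLIST = {"malware-example.test"}  # assumed malware/phishing database
REPUTATION = {"reuters.com": 0.9, "fake-news-example.test": 0.1}  # assumed scores, 0..1


@dataclass
class LinkEvidence:
    url: str
    corroborating_hits: int  # assumed count of high-confidence search results matching the story


def classify_link(ev: LinkEvidence, min_reputation: float = 0.5, min_hits: int = 2) -> str:
    """Label a link 'verified' or 'not verified' using a simple, assumed decision rule."""
    domain = urlparse(ev.url).netloc.lower()
    if domain.startswith("www."):
        domain = domain[4:]
    # A blocklisted site is never verified, regardless of other evidence.
    if domain in BLOCKLIST:
        return "not verified"
    reputation = REPUTATION.get(domain, 0.0)  # unknown sites get no reputation credit
    # Verified if the source is reputable or the story is corroborated elsewhere.
    if reputation >= min_reputation or ev.corroborating_hits >= min_hits:
        return "verified"
    return "not verified"


def classify_tweet_snapshot(ocr_text: str, user_tweets: list) -> str:
    """Verified only if the screenshot text matches a tweet the user actually posted."""
    normalized = " ".join(ocr_text.lower().split())  # ignore case and extra whitespace
    for tweet in user_tweets:
        if " ".join(tweet.lower().split()) == normalized:
            return "verified"
    return "not verified"
```

Treating the blocklist as a hard veto, and accepting either a reputable source or independent corroboration, is just one plausible way to weigh the signals; the students have not published their rule in this form.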

The browser plug-in then adds a little tag in the corner that says whether the story is verified.

For instance, it discovered that this news story promising that pot cures cancer was fake, so it noted that the story was "not verified."

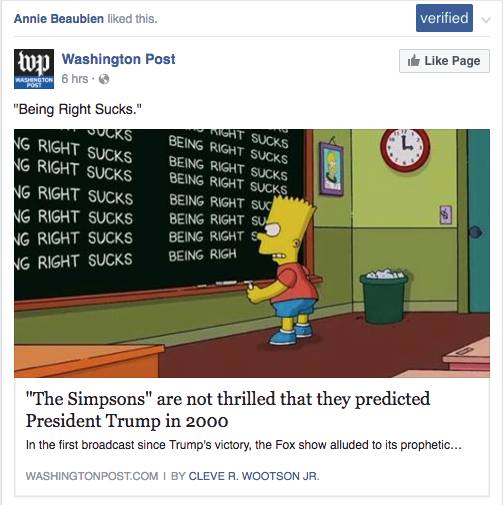

But this news story about the Simpsons being bummed that the show predicted the election results? That was real and was tagged "verified."

The students have released their extension as an open-source project, so any developer with the know-how can install it and tweak it.

A Chrome plug-in that labels fake news obviously isn't the total solution for Facebook to police itself. Ideally, Facebook will remove fake stuff completely, not just add a tiny, easy-to-miss tag that requires a browser extension.

But the students show that algorithms can be built to determine, with reasonable certainty, which news is true and which isn't, and that something can be done to put that information in front of readers as they consider clicking.

Facebook, by the way, was one of the companies sponsoring this hackathon event.

Word is that many Facebook employees are so upset about this situation that a group of renegade employees inside the company is taking it upon itself to figure out how to fix the problem, BuzzFeed reports. Maybe FiB will give them a head start.