- Lensa has faced criticism since the AI app exploded in popularity at the end of 2022.

- The app faces claims of sexualizing and racializing avatars and collecting personal data.

- Here are some of the biggest concerns facing Lensa and its AI, including allegations that it steals from artists.

Open any social-media platform and you’ll be bombarded with images of friends and internet acquaintances transformed into artsy digital avatars.

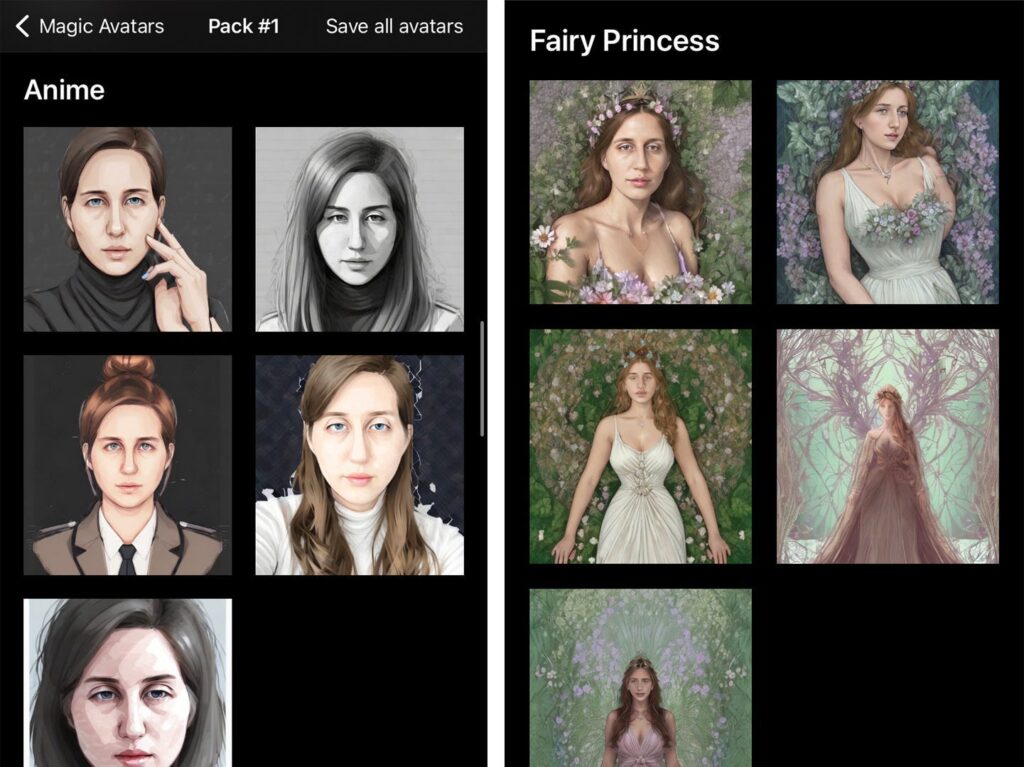

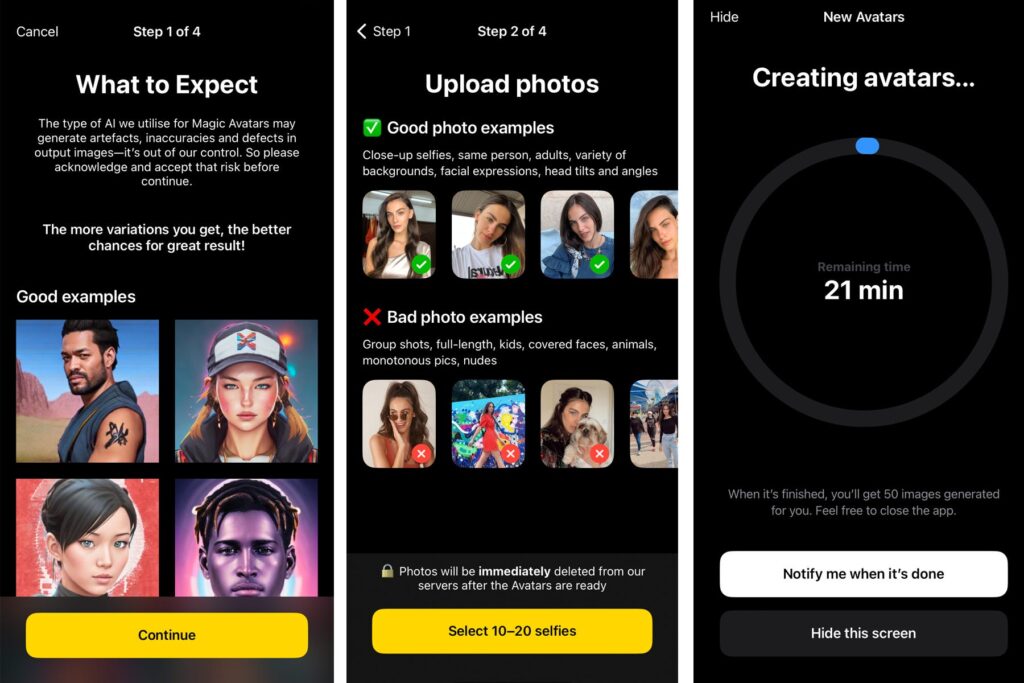

The Lensa app is behind those avatars. Prisma Labs developed it in 2018 as a photo-editing tool, and its "Magic Avatar" feature debuted in late November 2022. The app then skyrocketed, racking up nearly 6 million downloads in December, according to Statista.

The fad even spurred new AI filters on TikTok and led to copycat apps. I tried Lensa in December and wrote about it as the app exploded. At the time, it seemed like just a fun app.

But since then, consumers have voiced concerns ranging from intellectual property to hyper-sexualization.

Claims of sexualization and racialization

Though Lensa's rules state "no nudes" and "no kids, adults only," users have taken to social media over the past few weeks to point out that several of their avatars seemingly had "huge boobs" and that the app would "give you a boob job."


Melissa Heikkilä, a reporter for MIT Technology Review, wrote in an article in December that 16 of the 100 avatars she received were topless, while an additional 14 put her in “extremely skimpy clothes and overtly sexualized poses.”

She's not alone. In a December survey conducted by Within Health, a digital service that assists with mental health and eating disorders, 80% of respondents said they felt Lensa sexualized their images.

According to Heikkilä, part of the issue is Lensa's use of Stable Diffusion, an open-source AI model trained on images collected from the internet. The internet is a chronic source of crude and problematic photos, and those images appear to be informing the app's results.

Others on social media said their images were "whitewashed": their skin appeared lighter than it did in the photos they submitted.


In a report for Wired, Olivia Snow said that, as an experiment, she uploaded photos of herself as a child, and the results transformed her face into "seductive looks and poses." One set showed "a bare back, tousled hair, an avatar with my childlike face holding a leaf between her naked adult's breasts."

Prisma Labs and Lensa did not respond to Insider's request for comment prior to publication. However, in Lensa's privacy policy, which was updated on December 15, 2022, in response to earlier backlash, the company acknowledges that users may receive "inappropriate" content.

“We do our best to moderate the parameters of the Stable Diffusion model, however, it is still possible that you may encounter content that you may see as inappropriate for you,” the policy states.

Allegations of stealing from artists

Just as quickly as Lensa avatars became ubiquitous online, allegations of theft and warnings from artists also emerged.

“If you’ve recently been playing around with the Lensa App to make AI art ‘magic avatars’ please know that these images are created with stolen art through the Stable Diffusion model,” Megan Rae Schroeder, an artist, wrote in a viral Twitter thread.

Schroeder further claimed Lensa is using a “legal loophole to squeeze out artists from the process” by operating its technology through “nonprofit means” that dodge licensing fees.


Eliana Torres, an intellectual-property attorney at Nixon Peabody, said the legality around Lensa is murky because US copyright law "treats AI as a tool or a machine, rather than as an author or a creator."

“However, the question of authorship and ownership of works created by AI is still an open question and is the subject of ongoing legal and policy debates,” Torres said.

The legal community "would welcome additional guidance from policymakers and courts when it comes to protecting consumers in connection with AI," Torres said.

Meanwhile, other artists, like the painter Agnieszka Pilat, are less concerned about the app's long-term impact. Pilat anticipates Lensa will fall as quickly as it rose.

“The flood of Lensa avatars feel cheap and overwhelming,” Pilat said in a statement shared with Insider. “The public’s juvenile self-absorption, the ultimate Narcissus moment, the me society, that’s what is at play here.”

Concern over data collection and privacy

As with the privacy concerns that surrounded FaceApp, a Russian-made app that also uses AI technology, some users are wary of how their biometric data and likenesses may be used.

Lensa states in its updated policy that it does "not use your personal data to generally train and/or create our separate artificial intelligence/products."

“Please note that we always delete all metadata that may be associated with your photos and (or) videos by default (including, for example, geotags) before temporarily storing them to our systems,” the policy says.

Still, experts urge caution when using apps like Lensa.

"We always have to be aware when our biometric data is being used for any purpose," David Leslie, a director of ethics at The Alan Turing Institute and a professor at Queen Mary University, told Wired. "This is sensitive data. We should be extra cautious with how that data is being used."