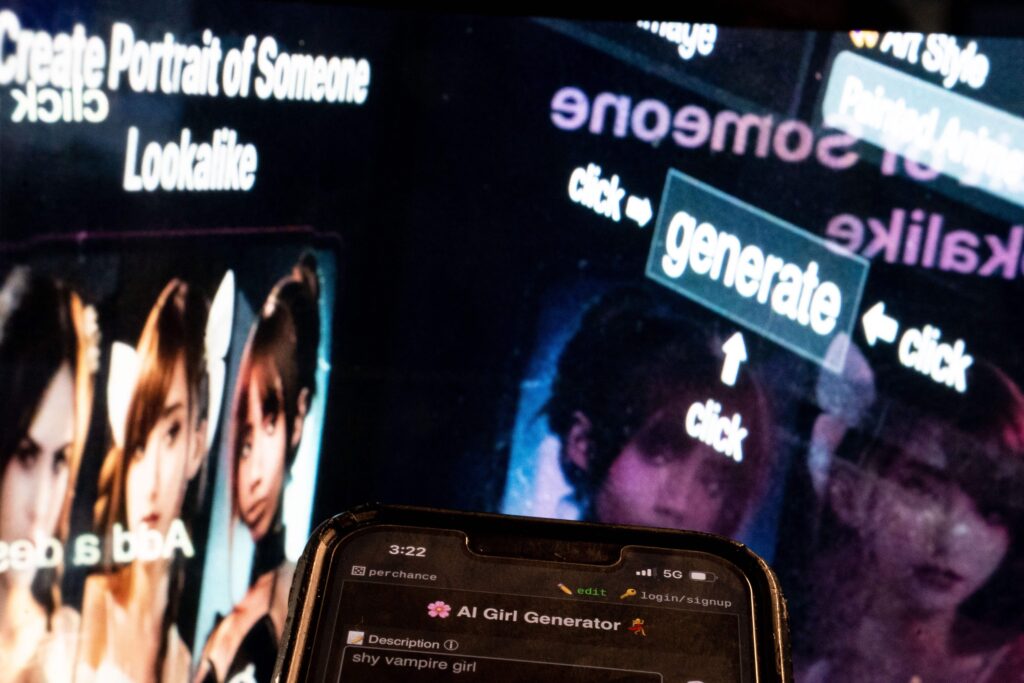

- Meta's oversight board is investigating two cases of AI-generated images of female public figures.

- One case involves a pornographic deepfake of a female American figure on Facebook.

- The board will review Meta's policies on how to handle explicit AI images.

Meta's oversight board announced on Tuesday it will investigate the company's policies around explicit AI deepfakes of women.

The tech giant's board said it's looking into two specific cases, one on Instagram and one on Facebook.

One of the cases involves an AI-generated image resembling a nude female American public figure with a man groping her. The image, whose caption named the figure, was posted in a Facebook group for AI creations.

Meta's oversight board didn't specify which female public figure was used in the AI deepfake.

The oversight board said a different user had already posted the AI-generated nude before it was shared in the Facebook group. The explicit photo was removed for violating the Bullying and Harassment policy for "derogatory sexualized photoshop or drawings."

The user who posted the photo appealed the removal, but an automated system rejected the appeal. The user then appealed to the Board.

Pornographic deepfakes of Taylor Swift on X caused an internet stir in January. Many of the images showed the pop star engaging in sexual acts in football stadiums.

One post uploaded by a verified user on X, formerly Twitter, attracted over 45 million views before it was taken down by moderators about 17 hours later.

The other investigation involves an AI-generated image of a nude woman that resembles a public figure from India. The content was posted on an Instagram account that only shares AI-generated images of Indian women.

In this case, Meta failed to delete the content after it was reported twice. The user who reported it then appealed to the Board, and Meta found that its decision to keep the content up was an error. It then removed the post for violating the Bullying and Harassment Community Standard.

The Board said it selected these cases to see whether Meta is effectively addressing explicit AI-generated imagery.

Politicians, public figures, and business leaders have spoken out about deepfakes and the risks they pose.

White House press secretary Karine Jean-Pierre previously said lax enforcement against deepfakes disproportionately impacts women as well as girls "who are the overwhelming targets."

Jean-Pierre also said that while legislation should play a role in tackling this issue, social media platforms should be banning harmful AI content on their own.

Microsoft CEO Satya Nadella called the nude deepfakes of Taylor Swift "alarming and terrible" and called for more protection from these images.

The risks go beyond pornography: deepfakes could also be used to influence elections. AI-generated calls faking messages from President Joe Biden have already turned up in the primaries.

Meta's oversight board wants the public to weigh in on the two cases. It asked for comments suggesting strategies on how to address this issue and feedback on its severity.

The oversight board will deliberate on the decisions over the next few weeks before posting the outcome, it said in the announcement. While the board's recommendations aren't binding, Meta has to respond to them within 60 days.